Ptolemy D. W. Banks, Department of Psychology, University of Warwick, Coventry

Briefing a participant is necessary to obtain informed consent. However, briefing can change a participant's behaviour; for example, knowledge of an upcoming memory test might cause participants to attend more closely to experimental stimuli so that they appear clever in the test. Studies that try to measure natural behaviour therefore use deception to avoid this behaviour change. However, this merely shifts the problem from a methodological one to an ethical one. This paper guides future research into a potential solution: myriad-briefing is a technique of presenting a collection of possible procedures to obscure the nature of the experiment, so that participants consent to the experimental procedure without knowing what the experiment will involve. Before more extensive investigation of the application of myriad-briefing, this paper investigated two salient concerns. Part 1 collects feedback from participants about myriad-briefing to see if presenting more procedures discourages participation. Results find no negative effect of myriad-briefing on participant interest. Part 2 tests whether participants pay attention to myriad-briefing in online studies. Results find that too few participants read the briefing to produce an observable effect, suggesting that myriad-briefing should be tested and applied in in-person experiments only.

Keywords: Briefing, informed consent, participant awareness, confounding results, deception, myriad-briefing

Ethical protocol asks that researchers brief participants about an experimental procedure before collecting data (American Psychological Association, 2017; The British Psychological Society, 2017). However, most psychology research recruits psychology undergraduate students who are educated in the field (Gallander Wintre, North and Sugar, 2001; Levenson, Gray and Ingram, 1976), so participants may be able to deduce what the experimenter is trying to investigate from the procedure. This is problematic because this awareness can change behaviour; for example, eating behaviour significantly changes when participants know their food intake is a measured variable (Robinson, Kersbergen, Brunstrom and Field, 2014). When attention influences the dependent variable in this way, the variable is confounded. To avoid this, researchers are forced to deviate from ethical code and deceive participants with a cover story. A cover story is a fictitious procedure told to participants to avoid them knowing the study aims and changing their behaviour. Thus, protecting the variables comes at the cost of ethical practice.

An example of this is a study by Nairne, Thompson and Pandeirada (2007), which investigated factors that affect implicit learning of words. They asked participants to rate words, gave participants a distractor task, and then provided a surprise word-recall test. They did not inform the participants of the word-recall test during briefing because awareness of the test may have encouraged participants to focus on remembering words. This would mean measuring explicit learning rather than implicit learning. Awareness-sensitive variables lead to a prevalent use of deception in research (Davis and Holt, 1992; Gross and Fleming, 1982). The continuous use of deception is arguably giving psychologists a reputation for being deceitful (Ledyard, 1995; Hey, 1991).

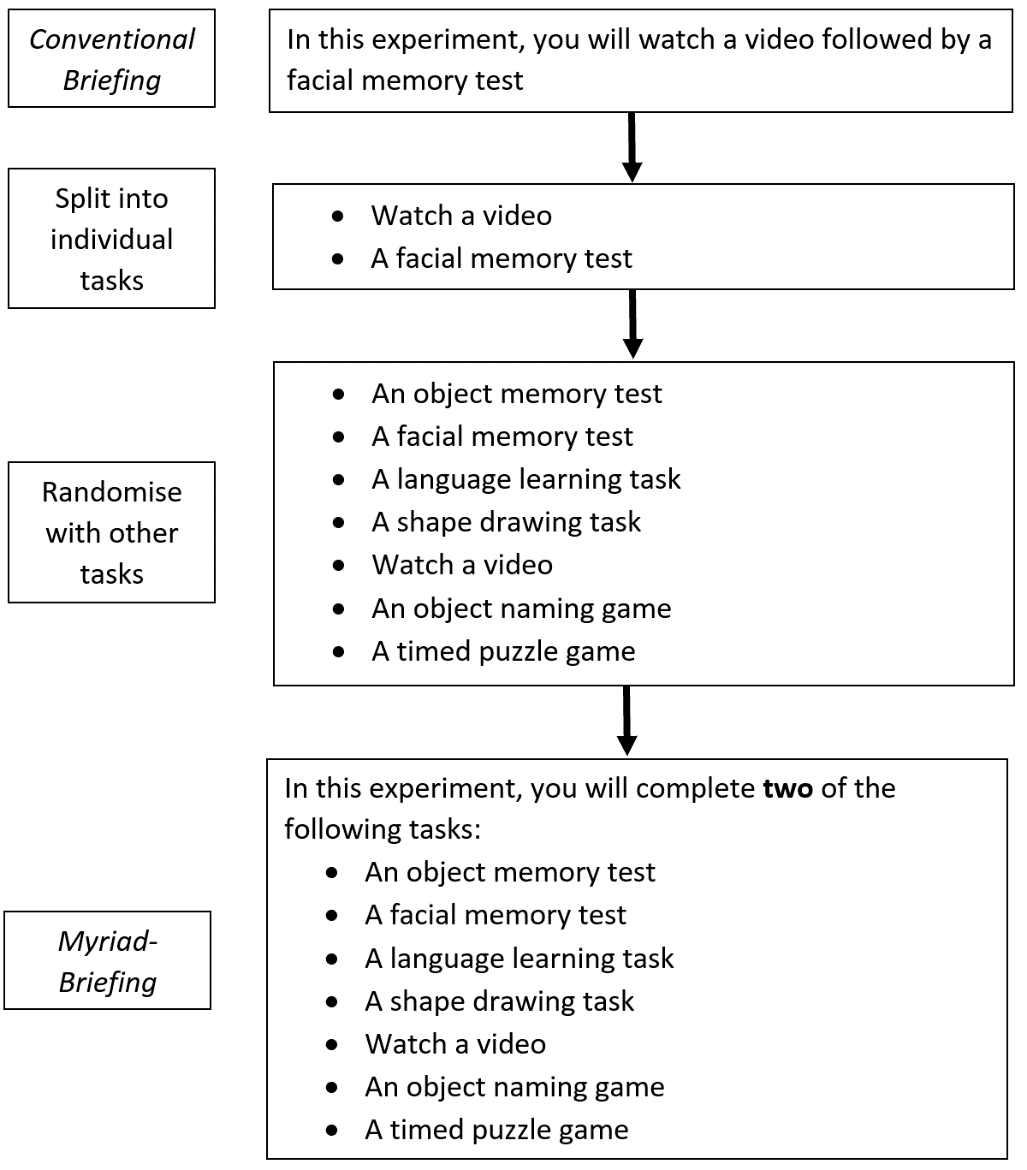

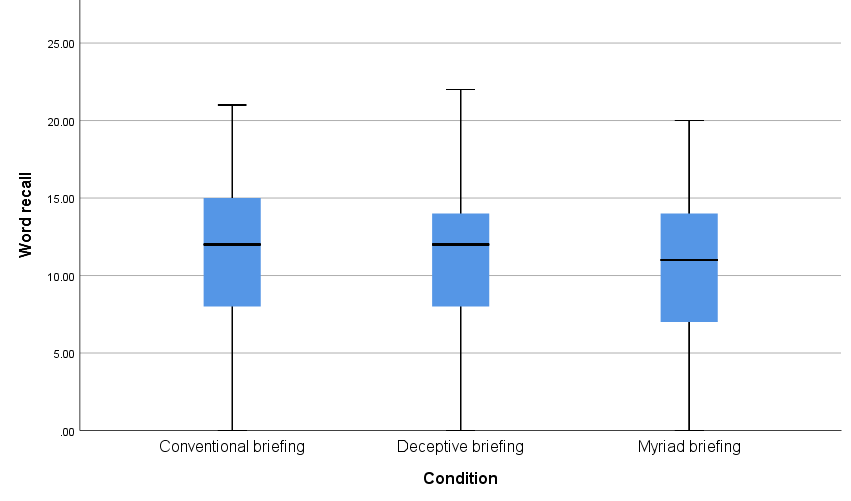

To resolve this, the author of this paper has designed myriad-briefing: a novel way to brief participants more ethically while avoiding deception. For myriad-briefing, a conventional briefing is simply split into individual tasks, which are then randomised with several other 'distractor tasks' (see Figure 1). The participant is told the number of tasks they will carry out but not told which tasks or in what order.

Participants are presented with the real procedure amongst several distractor procedures, but they read and consent to all of them. Thus, the experimenter has obtained informed consent to do the real tasks, consistent with ethical code, but the multitude of presented tasks creates a myriad of possible procedures. With so many possibilities of what the experiment may involve, participants do not know what to focus on. As far as the author is aware, this method of briefing has never been investigated despite its potential application to many studies.
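The construction described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the author's implementation: the function name `build_myriad_briefing`, the task descriptions and the wording of the briefing sentences are all hypothetical.

```python
import random


def build_myriad_briefing(real_tasks, distractor_tasks, seed=None):
    """Mix the real tasks with distractor tasks and shuffle the combined list.

    All names and wording here are hypothetical; the paper does not
    specify an implementation.
    """
    rng = random.Random(seed)
    listed = list(real_tasks) + list(distractor_tasks)
    rng.shuffle(listed)  # hide which tasks are real and in what order
    lines = ["You may be asked to complete any of the following tasks:"]
    lines += [f"- {task}" for task in listed]
    # Participants are told how many tasks they will do, but not which ones.
    lines.append(f"You will complete {len(real_tasks)} of these tasks.")
    return "\n".join(lines)


# Hypothetical task descriptions, for illustration only.
real = ["rate a list of words", "recall a sequence of digits", "recall a list of words"]
distractors = [
    "solve simple arithmetic problems",
    "judge the length of lines",
    "describe a picture",
    "sort shapes by colour",
]

briefing = build_myriad_briefing(real, distractors, seed=1)
print(briefing)
```

Stating the number of tasks in the final line of the briefing reflects the requirement that participants know how many tasks they will carry out, even though the list itself does not reveal which ones.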

A full review of the methodological and ethical considerations of briefing, and an experiment to verify the effectiveness of myriad-briefing are currently underway. This paper describes an online pilot study with two parts to explore two preliminary concerns:

The first concern is that myriad-briefing shows participants a larger number of procedures compared to conventional briefing. Therefore, with myriad-briefing, participants may perceive the experiment to be arduous and become unwilling to participate. Part 1 investigates participants' attitudes towards myriad-briefing using qualitative and quantitative measures, with the aim of determining whether myriad-briefing has a negative effect on willingness to participate. Participant feedback was also obtained to improve myriad-briefing for future research.

The second concern is that research suggests that most participants do not read online consent forms, including briefing (Perrault and Keating, 2018; Knepp, 2014; Varnhagen et al., 2005). This suggests that myriad-briefing is redundant in online research because the briefing is ignored. Part 2 investigates whether different briefing techniques have any effect on performance online, and whether the briefing is read by participants online. The aim of this part is to determine whether online research is an appropriate context to test the effectiveness of myriad-briefing, and to further explore its applications.

All 238 participants were recruited from an online participant pool at the University of Warwick (SONA cloud-based subject pool) and completed both parts online via Qualtrics for a chance to win £10. By using the university participant pool, the sample was representative of a sample typically used in research at the institution. It is likely the participants were experienced in online studies.

Demographic data collected showed 136 (57%) were female, 81 (34%) male and 21 (9%) did not disclose. No other demographic data was deemed relevant. Of the 238 participants, 40 (16.8%) did not complete all parts of the experiment and were excluded from the data. Attrition analysis suggested no noteworthy findings of when participants dropped out, or what condition they were in.

The experiment lasted 12 minutes on average. This study is consistent with the British Psychological Society code of ethics, and the protocol was approved by the Department of Psychology ethics committee at the University of Warwick before enrolment.

Myriad-briefing may dissuade participants from taking part in a study because it presents more procedures compared to conventional briefing. Participants may see the list of tasks and drop out before seeing that they only do a few. To see if myriad-briefing is perceived more negatively than conventional briefing, participants were given hypothetical briefing forms and asked to provide qualitative and quantitative feedback.

This study also investigated whether the difficulty of procedures, as well as the number of procedures, has an effect on participant interest. Different groups were presented with different workloads. It was hypothesised that participants would be more interested if the distractor procedures had a lower workload, i.e. shorter or easier tasks.

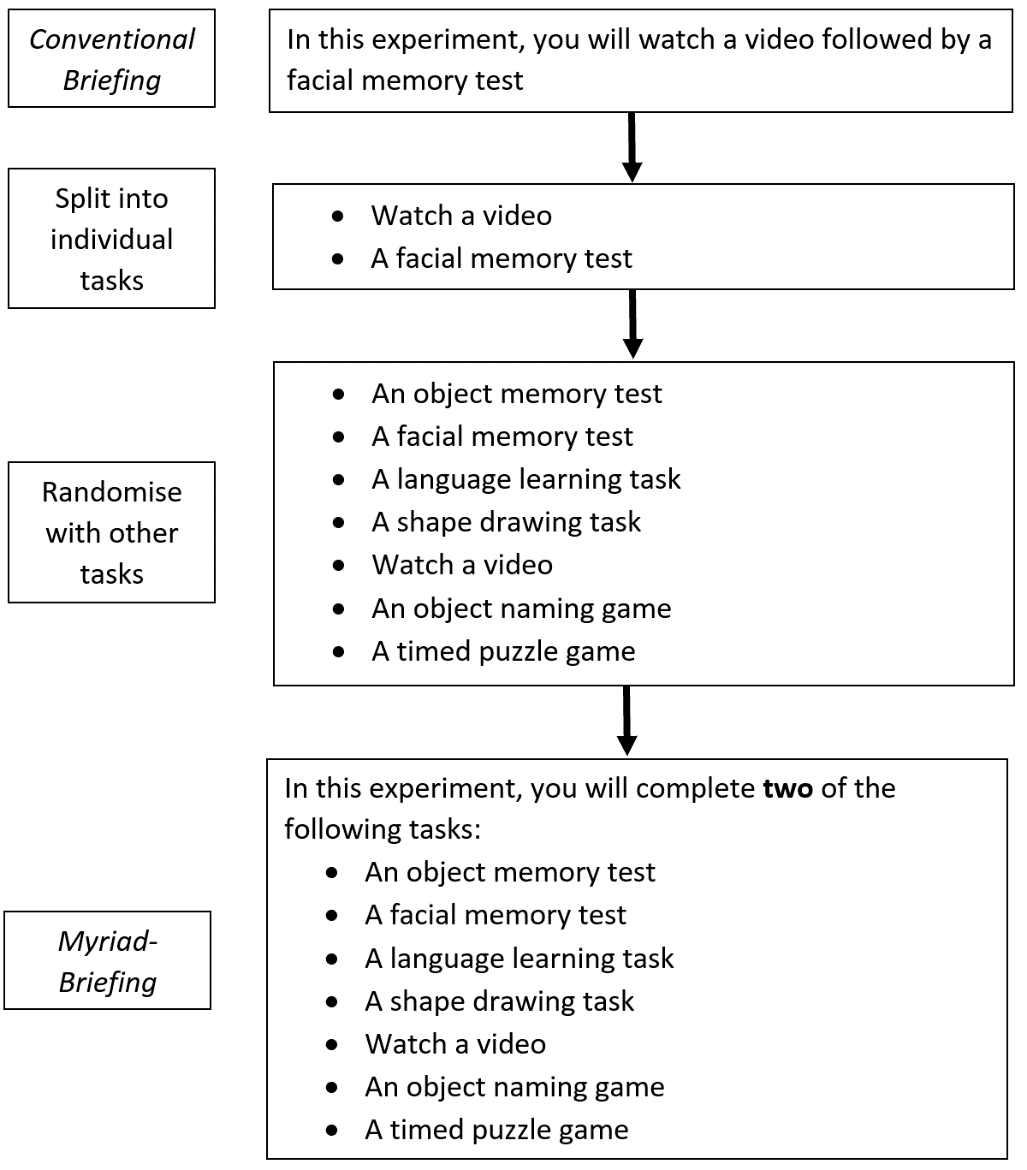

Participants were randomised by a computer-generated sequence into one of four groups in a 1:1:1:1 ratio. Each group was presented with a different briefing. One group saw a conventional briefing (only mentioning a word memory test; n = 41). Three groups saw myriad-briefing; each of the myriad-briefing groups saw the same kind of tasks, but with a low (n = 56), medium (n = 43) or high (n = 55) workload using different quantifiers. Below are the tasks presented, with the low, medium and high quantifiers in brackets respectively.

A simple three-item scale was constructed, including 'how likely would you participate in the above experiment if you had the time?' (a neutral question), 'how likely could you find the above experiment boring?' (a negative question) and 'how likely could you find the above experiment enjoyable?' (a positive question). Each question was on a six-point scale from 'extremely unlikely' to 'extremely likely'. Having one neutral, one negative, and one positive question balanced the neutrality of the scale. The order of questions was also randomised to avoid systematic order effects. The same scale was used across conditions.

For quantitative analysis, the six-point scale was given a numerical value from 'extremely unlikely' to 'extremely likely' (-3, -2, -1, 1, 2, 3). The question about likelihood of boredom was reversed (+3 to -3). Scores of all three questions were then summed and the mean sum of each condition was compared. Additionally, there was a text entry box where participants were asked to 'please express any opinions about the above study that you may have as a participant' for qualitative analysis. Thematic analysis was performed on comments relevant to the presentation of procedures.
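The scoring rule above can be made concrete with a short sketch. It assumes responses are stored as positions 1 to 6 on the scale, which the paper does not state; the function name `interest_score` is hypothetical.

```python
# Values for the six options, from 'extremely unlikely' to 'extremely likely'.
VALUES = (-3, -2, -1, 1, 2, 3)


def interest_score(participate, boring, enjoyable):
    """Sum the three items, with the boredom item reverse-scored.

    Each argument is the position of the chosen option on the six-point
    scale, from 1 ('extremely unlikely') to 6 ('extremely likely').
    Storing responses as positions is an assumption made for this sketch.
    """
    def value(position):
        if not 1 <= position <= 6:
            raise ValueError("position must be between 1 and 6")
        return VALUES[position - 1]

    # Reversing the boredom item (+3 to -3) is the same as negating its value.
    return value(participate) - value(boring) + value(enjoyable)


# Hypothetical participant: positions 5, 2 and 5 map to values 2, -2 and 2.
print(interest_score(5, 2, 5))  # 2 - (-2) + 2 = 6
```

Under this rule each participant's summed score falls between -9 and 9, with higher scores indicating more interest in the hypothetical study.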

For the quantitative analysis, a Kruskal–Wallis Test found no significant difference between groups for the rating scale (χ2(3) = 2.653, p > .05), seen in Figure 2. Firstly, this suggests that participants who saw the myriad-briefing were as interested in the hypothetical study as participants who saw the conventional briefing. That is to say, a larger number of procedures on the consent form did not discourage participant interest. Secondly, this suggests that participants who saw more difficult tasks were as interested in the hypothetical study as participants who saw the easier tasks. That is to say, the workload of procedures on the consent form did not discourage participant interest.

These findings are, however, limited by the unreliability of data collected online; participants may complete the questions with little or no consideration to minimise the time they spend doing the experiment. Without an experimenter present, there is little motivation to take time giving sincere answers.
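For readers unfamiliar with the Kruskal–Wallis comparison reported above, the sketch below computes the H statistic from ranks using the standard formula with a tie correction. It is an illustration only: the demonstration scores are made up, the function name `kruskal_wallis_h` is hypothetical, and the paper does not say which software produced the reported statistic. Rather than computing a p-value, the sketch compares the reported statistic with the critical chi-square value for three degrees of freedom at the .05 level (7.815).

```python
def kruskal_wallis_h(*groups):
    """Return the Kruskal-Wallis H statistic with the standard tie correction."""
    pooled = sorted(x for group in groups for x in group)
    n_total = len(pooled)

    # Give tied values the mean of the ranks they occupy (mid-ranks).
    rank_of = {}
    tie_term = 0
    start = 0
    while start < n_total:
        end = start
        while end < n_total and pooled[end] == pooled[start]:
            end += 1
        ties = end - start
        rank_of[pooled[start]] = (start + 1 + end) / 2
        tie_term += ties**3 - ties
        start = end

    rank_sums_sq = sum(
        sum(rank_of[x] for x in group) ** 2 / len(group) for group in groups
    )
    h = 12 / (n_total * (n_total + 1)) * rank_sums_sq - 3 * (n_total + 1)
    return h / (1 - tie_term / (n_total**3 - n_total))


# Made-up scores for three small groups (not data from the study).
h_demo = kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(round(h_demo, 3))  # 7.2

# Critical value of chi-square with 3 degrees of freedom at alpha = .05.
CRITICAL_DF3 = 7.815
reported_h = 2.653  # statistic reported for the four briefing groups
print(reported_h < CRITICAL_DF3)  # True, consistent with p > .05
```

A rank-based test suits summed rating scores because it makes no assumption that the scores are normally distributed.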

For the thematic analysis, no participants gave any relevant comments on the conventional briefing. For all the myriad-briefing groups, there were positive comments of interest and curiosity about the multiple potential procedures, but many were qualified by a negative statement suggesting that there were too many tasks to complete. Examples include 'too many tasks', 'way too much to do for one experiment' and 'interesting but challenging'. This suggests participants misinterpreted the briefing and believed that all the tasks must be completed. Some participants also complained of 'too [many] words' in the briefing. Together, these comments suggest that myriad-briefing should make it absolutely clear that there are only a limited number of tasks to do.

Overall, the results of this experiment do not suggest that myriad-briefing compromises participants' interest. However, the results are not reliable enough to clearly dismiss this concern. This paper, therefore, recognises a need to further investigate the effect of myriad-briefing on willingness to participate in a more reliable way than online. Additionally, the qualitative feedback provides valuable insight to improve how myriad-briefing is phrased to participants.

Myriad-briefing could be an effective way to obtain consent without confounding variables but the technique must be reliably tested before being applied to research methodology. Testing myriad-briefing online could require less time and obtain a larger sample than experimenting in person, but literature suggests that extremely few participants read online consent forms (Perrault and Keating, 2018; Knepp, 2014). If participants ignore the consent form, the technique is no different from conventional briefing or even no briefing at all. Therefore, this part explores whether myriad-briefing should be evaluated through online research or not.

In this part, participants were presented with an online version of Nairne, Thompson and Pandeirada's (2007) implicit learning study. Different groups were presented with different briefing techniques and the effect on learning was compared. In theory, conventional briefing informs participants of the upcoming memory test and results in higher learning due to greater effort to learn the stimulus words. In practice, however, it was hypothesised that participants in all conditions would ignore the briefing and that there would be no difference in learning.

As well as the effect on learning, to confirm whether participants read the briefing, participants were also tested on what tasks they saw in the briefing form. Therefore, it was also hypothesised that participants would ignore the online briefing and fail to recall contents of the briefing form.

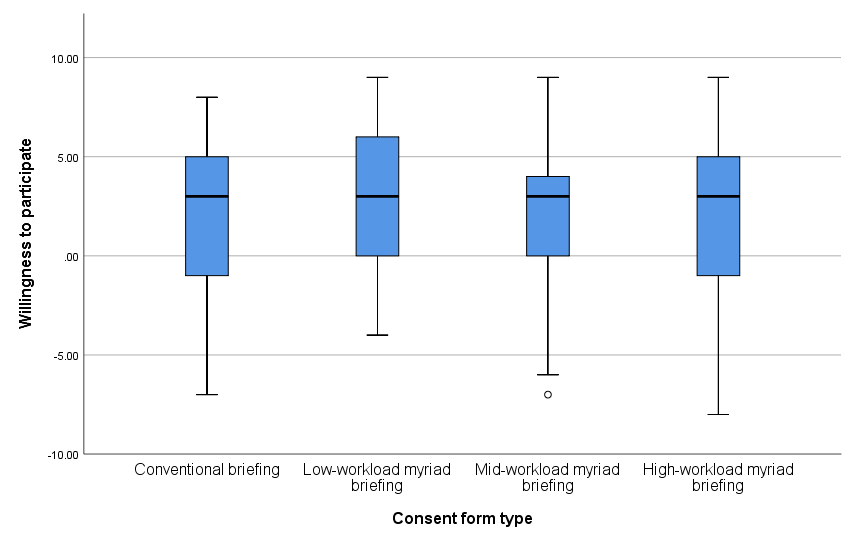

Participants were randomised by a computer-generated sequence into one of three groups in a 1:1:1 ratio. Groups were briefed either conventionally (n = 64), whereby the word-recall test was explicitly stated; deceptively (n = 63), whereby the word-rating task was mentioned without mention of a word-recall test; or with myriad-briefing (n = 72), whereby the tasks were randomised in a list of other distractor procedures. After briefing, there was a word-rating task, a distractor task and a surprise recall test of the words:

Word-rating task: all participants rated 30 words according to their relevance to a hypothetical situation. All participants were given the same hypothetical situation and saw the same words in the same order for five seconds each. Words were rated from 1 to 5 for relevance to the hypothetical situation (1 = not relevant; 5 = very relevant). The purpose of this task was simply to expose participants to the words; the ratings served no purpose. The scenarios and words were taken from the original Nairne, Thompson and Pandeirada (2007) study.

Distractor task: participants performed a digit-recall task twice. In each round, seven single digits flashed sequentially on screen for one second each, then participants were asked to recall the sequence. The sequences were the same for all participants.

Surprise recall test: participants were asked to write as many words from the word-rating task as they could remember. Words were accepted with simple spelling errors (e.g. 'truk' instead of 'truck') but not semantic errors (e.g. 'lorry' instead of 'truck'). Some participants spent longer trying to recall words. To control for the unequal time and effort that participants put in, the number of words accurately recalled was divided by the time spent on the recall page of the survey. This gave a words-recalled-per-minute variable, which was compared between groups.
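The words-recalled-per-minute variable described above amounts to a simple rate. The sketch below assumes that time on the recall page was logged in seconds, which the paper does not specify; the function name `words_per_minute` and the example figures are hypothetical.

```python
def words_per_minute(words_recalled, seconds_on_page):
    """Divide the number of correctly recalled words by the minutes spent recalling.

    Assumes the time on the recall page is recorded in seconds.
    """
    if seconds_on_page <= 0:
        raise ValueError("time on the recall page must be positive")
    return words_recalled / (seconds_on_page / 60)


# Hypothetical participant: 12 correct words in 90 seconds (1.5 minutes).
print(words_per_minute(12, 90))  # 8.0
```

Dividing by time means that a participant who recalls a few words quickly and one who recalls many words slowly can receive similar scores, which is the intended control for unequal effort.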

Before being debriefed and completing the study, participants were shown a list of 14 tasks in the same arbitrary order. They were asked to select the tasks that they saw earlier in the briefing form. Accuracy of how many were correctly selected/correctly left unselected was out of 14.
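The accuracy score described above counts every task that was either correctly selected or correctly left unselected. A minimal sketch follows; the task labels, the number of briefed tasks and the function name `recognition_accuracy` are hypothetical and are not taken from the study materials.

```python
def recognition_accuracy(all_tasks, briefed, selected):
    """Count tasks that were correctly selected or correctly left unselected."""
    briefed, selected = set(briefed), set(selected)
    # A task is scored as correct when its selection matches whether it was briefed.
    return sum((task in briefed) == (task in selected) for task in all_tasks)


# Hypothetical labels for the 14 listed tasks.
tasks = [f"T{i}" for i in range(1, 15)]
briefed_tasks = tasks[:7]                 # assume T1 to T7 appeared in the briefing
chosen_tasks = ["T1", "T2", "T3", "T8"]   # three hits and one false recognition

score = recognition_accuracy(tasks, briefed_tasks, chosen_tasks)
print(score)  # 3 hits + 6 correct rejections = 9 out of 14
```

Because correct rejections count towards the score, a participant who selects nothing still scores above zero under this rule, which is worth bearing in mind when interpreting scores out of 14.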

Using a Kruskal–Wallis Test, there was no significant difference in word recall between groups (χ2(2) = 0.229, p > .05). This means that participants who were told of the word-recall test performed no differently than participants who were not told. This suggests that participants in each group paid little attention to the briefing and no group benefited as a result. This is consistent with the literature (Perrault and Keating, 2018; Knepp, 2014). An online study, therefore, is an inappropriate context to test the effectiveness of myriad-briefing because participants ignore the consent form, resulting in no observable effect.

In support of this claim, participants in the myriad-briefing group had an average accuracy of 4.8 out of 14 when recognising what procedures they were briefed with. This means that, on average, participants failed to recognise, or falsely recognised, about nine procedures that they saw minutes earlier during the briefing. Taken together, the lack of difference between groups in word recall and the poor recognition of the briefing suggest that little attention was given to the briefing form. Investigations into the application of myriad-briefing should focus on in-person research.

There is the possibility that the measures were not sensitive enough. Perhaps briefing does influence implicit learning, but the experiment failed to detect it. It is worth noting that the data was not normally distributed and there was huge variation in performance, which creates a lot of 'noise'. To best evaluate myriad-briefing as a methodology in a future study, more controlled laboratory measures should be employed for clearer data. For the test of the briefing contents, an open-ended recall may have been more appropriate than a recognition list, as this avoids participants getting some right due to chance.

This paper introduces myriad-briefing as a proposed technique for briefing participants without compromising ethics or methodology. To be employed in research, myriad-briefing must first be tested as an effective solution. The experiment described in this paper has provided valuable insight into how myriad-briefing can be improved and the best way to test its effectiveness.

One anticipated concern about myriad-briefing was that presenting more procedures may discourage participants. Part 1 found that neither a larger number of procedures nor the workload of the procedures influenced interest in participating in a hypothetical study. Although a single null finding cannot rule out an effect, the results encourage further testing of myriad-briefing. Additionally, the feedback will be used to refine the phrasing of myriad-briefing to minimise ambiguity.

A second anticipated concern was that evaluating myriad-briefing online may be a waste of resources because participants often ignore the online briefing (Perrault and Keating, 2018; Knepp, 2014). Consistent with this, Part 2 found no effect of briefing on a variable thought to be 'awareness-sensitive', and participants failed to accurately recognise what they were briefed with. This finding has two wider implications. Firstly, it replicates and reiterates the issue that informed consent is rarely obtained in online research. Given the increasing number of studies conducted online, developing new ways to encourage participants to pay attention to the briefing is increasingly important. Secondly, it suggests that myriad-briefing will be redundant for online research even if future research proves it effective for in-person research.

A limitation common to both parts of this paper is that online research provides unreliable and noisy data, although the findings have still been insightful. This paper recommends that a future study evaluate myriad-briefing with more sensitive measures in a face-to-face experiment, where participants may be more motivated to engage. The author is currently collecting data for an experiment of this nature and writing a more in-depth review of the potential benefits of myriad-briefing.

This research was supported by a grant from the Institute of Advanced Teaching and Learning at the University of Warwick. I thank Dr Adrian von Mühlenen for his guidance throughout this project, I thank Dr Spencer Collins for his invaluable support, and I thank the peer reviewers for their thoughtful suggestions.

American Psychological Association. (2017), 'Ethical principles of psychologists and code of conduct', available at https://www.apa.org/ethics/code/ethics-code-2017.pdf, accessed 1 June 2019

Davis, D. D. and Holt, C. A. (1992), Experimental Economics, Princeton, NJ: Princeton Univ. Press

Gallander Wintre, M., North, C. and Sugar, L. A. (2001), 'Psychologists' response to criticisms about research based on undergraduate participants: A developmental perspective', Canadian Psychology/Psychologie canadienne, 42 (3), 216–25

Gross, A. E. and Fleming, I. (1982), 'Twenty years of deception in social psychology', Personality & Social Psychology Bulletin, 8 (3), 402

Hey, J. D. (1991), Experiments in Economics, Oxford: Basil Blackwell

Knepp, M. M. (2014), 'Personality, sex of participant, and face-to-face interaction affect reading of informed consent forms', Psychological Reports, 114 (1), 297–313

Ledyard, J. O. (1995), 'Public goods: A survey of experimental research', in Kagel, J. H. and Roth, A. E. (eds.), The Handbook of Experimental Economics, Princeton, NJ: Princeton Univ. Press

Levenson, H., Gray, M. J. and Ingram, A. (1976), 'Research methods in personality five years after Carlson's survey', Personality & Social Psychology Bulletin, 2 (2), 158

Nairne, J. S., Thompson, S. R. and Pandeirada, J. S. (2007), 'Adaptive memory: Survival processing enhances retention', Journal of Experimental Psychology: Learning, Memory, and Cognition, 33 (2), 263–73

Perrault, E. K. and Keating, D. M. (2018), 'Seeking ways to inform the uninformed: Improving the informed consent process in online social science research', Journal of Empirical Research on Human Research Ethics, 13 (1), 50

Robinson, E., Kersbergen, I., Brunstrom, J. M. and Field, M. (2014), 'I'm watching you. Awareness that food consumption is being monitored is a demand characteristic in eating-behaviour experiments', Appetite, 83, 19–25

The British Psychological Society. (2017), 'Code of human research ethics', available at https://www.bps.org.uk/sites/bps.org.uk/files/Policy/Policy%20-%20Files/BPS%20Code%20of%20Human%20Research%20Ethics.pdf, accessed 1 June 2019

Varnhagen, C. K., Gushta, M., Daniels, J., Peters, T. C., Parmar, N., Law, D., … and Johnson, T. (2005), 'How informed is online informed consent?', Ethics & Behavior, 15 (1), 37–48

Informed consent: The consent given by a participant to take part in a study in full knowledge of the potential outcomes.

Kruskal–Wallis Test: A statistical analysis for comparing two or more independent samples when distribution is not normal.

To cite this paper please use the following details: Banks, P.D.W (2020), 'Myriad-briefing: A pilot study into its effect on participation and its appropriateness for online research', Reinvention: an International Journal of Undergraduate Research, Volume 13, Issue 1, https://reinventionjournal.org/article/view/488. Date accessed [insert date]. If you cite this article or use it in any teaching or other related activities please let us know by e-mailing us at Reinventionjournal@warwick.ac.uk.