Abstract

This paper explores the evolving role of Artificial Intelligence (AI) in primary education, highlighting its potential to personalise learning, alleviate teacher workload and enhance student outcomes. It critically examines the integration of AI technologies, ranging from intelligent tutoring systems to voice-activated assistants, and their implications for pedagogy, student wellbeing and digital literacy. While acknowledging AI’s transformative capabilities, the paper also addresses ethical concerns, including data privacy, bias and overreliance on automated systems. Drawing on current research, educational policy and practical examples, it advocates for the responsible adoption of AI guided by informed teacher judgement and robust digital literacy education. Ultimately, it calls for collaborative efforts among educators, technologists and policymakers to ensure AI enriches learning without compromising human values or professional integrity.

Keywords: Artificial Intelligence (AI) in primary education, AI and personalised learning, Digital literacy in children’s learning

Technology is increasingly becoming a necessity in our world as we adapt ourselves to accommodate it (Worth, 2024). This dependency is evident among young learners, who seamlessly navigate their lives both online and offline (Department for Education (DfE), 2023), with children in Key Stage 2 averaging a staggering six hours of screen time per day (Binns, 2024). As one of the most promising forms of technology today, Artificial Intelligence (AI) has created exciting possibilities within the education system as it strives to remain relevant (Major et al., 2018). Referring to various computer systems designed to emulate intelligent human behaviours such as reasoning and learning (Chen et al., 2020; Liang et al., 2021), AI offers remarkable potential to support children’s learning. In response to the COVID-19 pandemic, AI is progressively becoming integrated within education policy and practice (Chen et al., 2020), influencing classroom discourse (Tuomi, 2018). Seen as a transformative tool for crafting personalised learning experiences (Carroll and Borycz, 2024), AI is thought to have the potential to revolutionise education (Patrick and Javed, 2024). However, others contend that its rise leads us towards a fully automated future based on a system that knows everything but understands nothing (Atherton, 2018). While AI is unlikely to replace teachers, it is crucial for educators to grasp both its potential and its challenges (Worth, 2024) in light of its integration into the learning environment (Patrick and Javed, 2024).

For the potential of AI to be fully realised, its development and application must be conducted in a secure and responsible manner. Consequently, its use in schools requires careful consideration, not only to understand its potential in supporting children’s learning (Schroeder et al., 2022) but also to question the risks and uncertainties it introduces. My observations as a student teacher indicate that AI is being integrated with varying degrees of confidence and purpose, ranging from teachers experimenting with classroom tools to pupils engaging with automated systems almost instinctively. These encounters reveal a tension: AI is simultaneously fluid and unfamiliar, widely present yet frequently misunderstood. Across these contexts, two dominant positions emerge: educators who are hesitant to approach AI, often because of limited awareness of its possibilities, and those who embrace its integration with little restraint, at times to the potential detriment of their students’ wider needs. Despite these differences, both positions underscore a shared requirement: a comprehensive understanding of AI’s capacities, coupled with the critical scepticism needed to maintain robust pedagogical practice. In response to this ongoing debate, strategies to support pedagogies that thoughtfully integrate AI will be examined, with implications for future educational practice.

Contrary to popular belief, utilising AI to enhance conventional routines is not a new concept (DfE, 2023), having held a role in supporting children’s learning for more than a decade (Luckin, 2023). However, with advancements in technology, its usage has become second nature, making the need to embrace such technologies in education ever more significant (Mitra, 2012). Paving the way to one possible future of education is a private school in London, which places AI, rather than human teachers, at the forefront of its pupils’ education. This decision to run a teacherless class was made in response to the meticulous nature of AI, and utilises its ability to learn from students and tailor teaching to individual strengths and areas for development (David Game College, 2024). Although early success has been proclaimed in supporting children academically (Carroll, 2024), it could be argued that a change this drastic does not fulfil the wider needs of all learners and undermines the role of the teacher (Butler and Starkey, 2024). Furthermore, given the pandemic’s negative impact on children’s mental health due to isolated online lessons (Worth, 2021), the question arises of whether educators have genuinely learnt from past mistakes. Although the teacherless class operates in a secondary setting, the need for schools to focus on children’s wellbeing is essential in every phase (YoungMinds, 2020); entrusting computers with full responsibility is not currently the solution (Nguwi, 2023). As witnessed during the pandemic, the absence of meaningful human connection left many learners struggling socially and emotionally, emphasising that technology alone cannot meet the holistic needs of children.

Mental health affects cognitive learning and development, highlighting the need to evaluate AI’s role in balancing wellbeing with academic achievement (Public Health England, 2021). Mitra (2017) acknowledges that, with recent technologies, teachers need not play as central a role in educational progression. Instead, the success of learning hinges on collaboration among students while allowing them to work alongside technologies to discover knowledge. By creating a more fluid learning environment, AI challenges traditional educational structures that emphasise teacher-led instruction. AI tools can foster cognitive development in ways that Piaget’s (1926) model overlooks, challenging the idea of fixed stages by encouraging a more adaptable understanding of cognitive growth. However, parallels can still be drawn between traditional and AI-enhanced learning, as both promote exploration as an active experience (Fiorella and Mayer, 2016). While not every lesson should be entirely student-driven (Mitra, 2017) – especially given the limited research on the long-term effects of these methods (Wilby, 2016) – evolving educational practices can create significant opportunities for development. Fundamental to this process is the empowerment of students, which AI enhances by providing access to technology, enabling them to explore their own knowledge, and developing individual thought as opposed to rote learning, ultimately cultivating self-regulated learners (Luckin, 2023).

AI can significantly enhance classroom discourse and the learning experience in primary schools when implemented thoughtfully (Tuomi, 2018). One innovative approach involves AI-driven robots, which demonstrate how to create self-regulated learners without marginalising the role of the teacher (Jones and Castellano, 2018). In Sweden, specialist robots have already been used in various social roles within classrooms, including answering questions appropriately to maintain pupil confidence, as in the EMOTE project (Serholt and Barendregt, 2016). While such advancements appear revolutionary, they come at a cost: limited functionality and a tendency to break down owing to insufficient development, which explains why few social robots are used regularly in education (Selwyn, 2019). For many teachers, who find it hard to overcome the clichés and fear-inducing stereotypes often perpetuated by the media, identifying the failings of robot use in education is a relief (Atherton, 2018). However, interactions within digitally enhanced classrooms are evolving, with technologies increasingly becoming integral to teaching and learning (Selwyn, 2019). A more accessible and widely accepted form of this technology is the voice-activated assistant, an intelligent personal assistant device such as Amazon Echo or Google Home. If these AI devices are specifically designed with educational functions, they have the potential to serve as intelligent learning assistants that can significantly influence future classroom practices (Butler and Starkey, 2024). It is important to emphasise that this development should not diminish the role of teaching assistants, who carry out a variety of essential tasks beyond the capabilities of voice-activated devices – something that I have directly observed. However, the demands of this role can be high, particularly when students hesitate to progress until their specific needs are met.
In this context, AI devices could help alleviate some pressures and enhance classroom discourse. Observations in practice demonstrate that when these devices are used with care and oversight, they can ease classroom management, although they never fully replicate the nuance of human interaction.

To ensure suitability for primary classrooms, adapted algorithms must prevent inappropriate responses when students seek personal advice or reassurance, as children tend to anthropomorphise artificial entities to enhance their understanding of an unpredictable world (Epley et al., 2007). Thus, the ethical implications of designing educational technologies that invite anthropomorphism warrant careful consideration, alongside a critique of algorithms (Perrotta and Selwyn, 2020) and cultural biases, particularly when such technology is not expressly developed for educational purposes (Celik, 2023). Although these advancements are currently in development (Atherton, 2018), AI presented as an educational tool should complement, rather than replace, human intelligence (Luckin, 2016). This approach enhances the learning experience, fostering greater interactivity and dynamism that promotes a culture of enquiry and empowers students to explore (Luckin et al., 2016). Consequently, before these benefits can be realised, it is essential to address misconceptions about robots among educators in order to fully harness AI’s potential to transform classroom discourse and create an enriching learning environment (Wang et al., 2023). Recent government guidance echoes this, underlining the importance of safe, transparent and human-centred integration of AI in schools (DfE, 2025).

To experience the transformative potential of AI in education, it is essential to embrace personalised learning approaches that cater to each student’s unique needs and preferences (Muthmainnah et al., 2022). By utilising data analytics and adaptive technologies, teachers can use AI to provide tailored learning experiences that engage students through their interests and support individual growth trajectories (Pillai, 2023). This personalisation not only addresses varying learning styles (Hood, 2024) but also helps to identify and rectify misconceptions, enhancing student outcomes (Luckin et al., 2016). During my school experience, examples of these tools were embedded within classroom routine, with one of the most successful being Times Tables Rock Stars. This is a prime example of where AI can take an area of the curriculum with low retention and transform it into an enjoyable experience, motivated by friendly competition that enhances engagement and understanding (Patrick and Javed, 2024). AI’s ability to improve confidence and fluency can similarly benefit children with Special Educational Needs, including those with dyslexia (Reid, 2017). For instance, AI-driven applications such as Nessy offer personalised reading support, enabling students to develop their skills at their own pace, thereby fostering greater independence, social inclusion and overall learning capabilities (Giaconi and Capellini, 2020). While these applications encompass activities that a proficient educator might design (Luckin et al., 2016), the increasing time constraints faced by schools necessitate the use of AI to offer immediate feedback and tailored practice (Roberts, 2024). This real-time assessment allows students to appreciate their progress and areas for improvement, ensuring that all learners feel empowered to engage fully in their educational journeys (DfE, 2024).
However, while these innovations hold promise, disparities in access to AI technologies can exacerbate existing educational inequities, particularly in disadvantaged areas (Sperling et al., 2022). Furthermore, the effectiveness of these AI systems relies on fostering a culture of resilience and adaptability in students (Schroeder et al., 2022). Without a supportive learning environment, even advanced technologies may fail to realise their full potential (Dweck, 2012). Moreover, if children are consistently exposed to information delivered by a computer, their ability to critically assess and analyse the validity of what they are told may be compromised (Dans, 2023).

Developing children’s digital literacy is essential when navigating the online world (DfE, 2023). With advancements in computer augmentation and fake news, distinguishing what is real from what is fake is increasingly becoming an issue, as evidenced by the staggering statistic that one in five children believes everything they read online is true (National Literacy Trust, 2018). Children’s inability to critically evaluate online information is a concern in a world ever more dependent on the internet as an information source (Leu, 2017). The technology’s capacity to generate misleading or false information is exemplified by AI hallucination, where AI systems produce responses that are not grounded in reality (Burrett, 2024). The possibility of bias in AI-generated content and the circulation of misinformation risk perpetuating stereotypes and undermining inclusive learning environments, posing significant ethical issues (Ardoin and Hicks, 2024). Therefore, it is important for teachers and children to be aware of the potential for bias in AI-generated content (DfE, 2023).

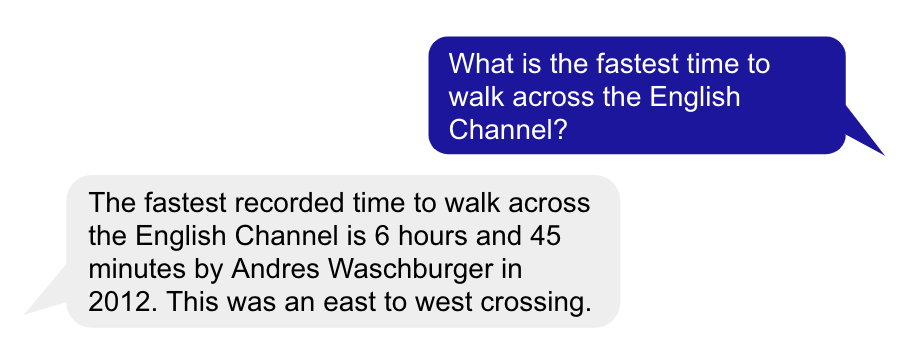

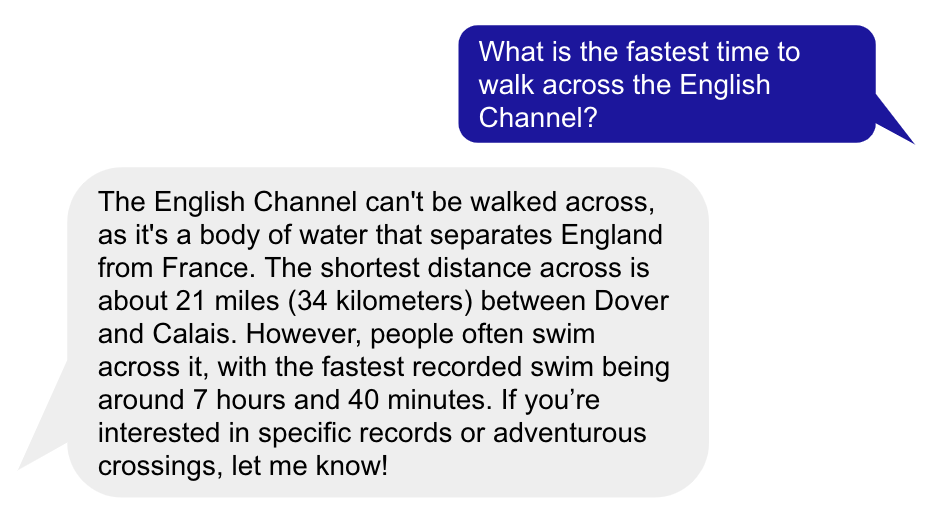

To counter this, it is commonly agreed that assessing the validity of knowledge requires children to develop a degree of healthy scepticism (Burrett, 2024; Dalton, 2015; Leu, 2017; Ofcom, 2022; Pilgrim et al., 2019). Reliable reasoning about the truth of website content can be rooted in checking the credibility of the author, examining links and assessing the domain used (Pilgrim et al., 2019). Even as this critical thinking develops, detecting AI hallucination can be trickier because of the enthusiastic and convincing responses AI produces (Ardoin and Hicks, 2024). However, if children know that AI is not always truthful, they are more likely to treat information with caution (DfE, 2023). To encourage this scepticism, Burrett (2024) suggests having children ask AI questions built on known falsehoods, to which AI may produce a natural-sounding, but fabricated, response (Figure 1). Conversely, some platforms, such as ChatGPT, have made significant advancements and can provide accurate information when asked the same question (Figure 2). Although accuracy could differ with other questions, this highlights the speed at which AI is developing, making distinguishing fact from fiction challenging. Therefore, for digital literacy to be developed, children should be exposed to big ideas both on and offline. Using philosophy for children assists with e-safety while encouraging critical thinking and exploring issues from multiple perspectives, minimising bias (Goto, 2022). By empowering children to engage thoughtfully with AI, teachers can foster a generation of learners who are sceptical in using technology but equipped to navigate the complexities of the future world they are helping to shape (D’Olimpio, 2017).

Figure 1: Answer generated from Microsoft Copilot in Bing

Figure 2: Answer generated from ChatGPT 4o

While it is crucial to equip children with the skills to navigate the complexities of the online world, it is equally important for teachers to recognise and embrace the benefits that AI offers them (D’Olimpio, 2017). Reports indicate that teachers are suffering from high demands, poor work-life balance and a perceived lack of resources (Ofsted, 2019), with only 1 per cent feeling their workload is manageable (National Education Union, 2024). As these pressures contribute to current recruitment and retention challenges, generative AI could serve as a transformative tool to lighten administrative burdens, with the aim of improving teacher morale and job satisfaction (Schroeder et al., 2022). However, a recent example showed that some teachers, despite recognising the benefits of AI, misuse it because they lack the training needed to use it effectively (Worth, 2024). Much of its successful usage lies in the ability to write effective prompts that enable the tool to provide the most relevant results (Burrett, 2024). Such prompts should not include identifiable data, as anything entered into an AI tool may be stored; this could include personal data and pupils’ work submitted without consent, which could then be used unethically to train generative AI models (DfE, 2023). Furthermore, any responses gathered must be sense-checked against a schema, reducing the possibility of overreliance leading to plagiarised outcomes (Pilgrim et al., 2019). By using AI to reduce time spent on non-pupil-facing activities, teachers can dedicate more attention to students requiring targeted support (Schroeder et al., 2022), enhancing their ability to deliver high-quality education (DfE, 2023). By balancing the advantages of AI with caution in its usage, educators can create an environment where technology serves as a powerful ally in supporting children’s learning (DfE, 2024). This thoughtful integration of AI enhances teacher effectiveness, leading to more meaningful learning experiences for students (Atherton, 2018).

In our current climate, where enhancing children’s learning experiences is paramount, integrating AI into education presents a compelling opportunity (Patrick and Javed, 2024). By alleviating teacher workloads and enabling personalised instruction, AI can create engaging and adaptive learning environments tailored to diverse student needs (Sperling et al., 2022). However, despite numerous studies advocating for AI’s inclusion in the classroom, only a small proportion of teachers have been observed practically applying and evaluating these technologies. This raises concerns that the speculative future of AI might overshadow our understanding of its real impact on students (Sperling et al., 2022). Even with the Department for Education’s (2023) endorsement, ongoing global teacher shortages and the drive for more efficient practices, the role of AI in teaching remains uncertain. It is important to emphasise, however, that utilising AI is not about replacing teachers, but about enhancing their capacity to meet varied learning requirements. As future educators, we must recognise both the potential and the pitfalls of generative AI, remaining mindful of the challenges it brings, such as data privacy and the necessity for adequate teacher training (Worth, 2024). As advancements in algorithms continue, it is essential to understand that the support AI provides for children’s learning should be guided by teachers’ professional judgement and knowledge of their students (DfE, 2023). Ultimately, this research has highlighted an urgent need for collaboration among educators, policymakers and technologists to harness the transformative power of AI, ensuring it enriches the educational landscape and prepares students for a dynamic future.
In the meantime, however, it emphasises the teacher’s responsibility to continue engaging with and exploring the multifaceted elements of AI in education, approaching its integration with informed curiosity and a commitment to student welfare (Sperling et al., 2022).

References

Ardoin, P. J. and W. D. Hicks (2024), ‘Fear and loathing: ChatGPT in the political science classroom’, Political Science & Politics, 2024 (4), 1–11, https://doi.org/10.1017/s1049096524000131, accessed 20 October 2024.

Atherton, P. (2018), ‘Artificial intelligence (AI) in education’, in Atherton, P. (ed.) 50 Ways to Use Technology Enhanced Learning in the Classroom: Practical Strategies for Teaching, London: Learning Matters, pp. 25–28.

Binns, R. (2024), ‘Screen time statistics 2024’, The Independent, Tuesday 18 June, https://www.independent.co.uk/advisor/vpn/screen-time-statistics, accessed 18 October 2024.

Burrett, M. (2024), ‘AI in education’, in Burrett, M. (ed.) Bloomsbury Curriculum Basics: Teaching Primary Computing, United Kingdom: Bloomsbury Publishing Plc, pp. 175–81.

Butler, L. and L. Starkey (2024), ‘OK Google, help me learn: An exploratory study of voice-activated artificial intelligence in the classroom’, Technology, Pedagogy and Education, 33 (2), 135–48.

Carroll, A. J. and J. Borycz (2024), ‘Integrating large language models and generative Artificial Intelligence tools into information literacy instruction’, The Journal of Academic Librarianship, 50 (4), https://doi.org/10.1016/j.acalib.2024.102899, accessed 18 October 2024.

Carroll, M. (2024), ‘UK’s first ‘teacherless’ AI classroom set to open in London’, Sky News, Saturday 31 August, https://news.sky.com/story/uks-first-teacherless-ai-classroom-set-to-open-in-london-13200637, accessed 20 October 2024.

Celik, I. (2023), ‘Towards intelligent-TPACK: An empirical study on teachers’ professional knowledge to ethically integrate artificial intelligence (AI)-based tools into education’, Computers in Human Behavior, 138, 107468, https://doi.org/10.1016/j.chb.2022.107468, accessed 26 September 2024.

Chen, X., H. Xie, D. Zou and G.-J. Hwang (2020), ‘Application and theory gaps during the rise of artificial intelligence in education’, Computers and Education: Artificial Intelligence, 1 (1), https://doi.org/10.1016/j.caeai.2020.100002, accessed 8 September 2024.

Dalton, B. (2015), ‘Charting our path with a web literacy map’, The Reading Teacher, 68 (8), 604–08, https://doi.org/10.1002/trtr.1369, accessed 9 September 2024.

Dans, E. (2023), ‘ChatGPT and the decline of critical thinking’, IE Insights, https://www.ie.edu/insights/articles/chatgpt-and-the-decline-of-critical-thinking/, accessed 8 October 2024.

David Game College (2024), ‘GCSE AI adaptive learning programme’, https://www.davidgamecollege.com/courses/courses-overview/item/102/gcse-ai-adaptive-learning-programme, accessed 20 October 2024.

Department for Education (2023), ‘Generative Artificial Intelligence (AI) in education’, https://www.gov.uk/government/publications/generative-artificial-intelligence-in-education/generative-artificial-intelligence-ai-in-education, accessed 7 October 2024.

Department for Education (2024), ‘Use cases for generative AI in education user research report’, https://assets.publishing.service.gov.uk/media/66cdb078f04c14b05511b322/Use_cases_for_generative_AI_in_education_user_research_report.pdf, accessed 24 October 2024.

Department for Education (2025), ‘Generative Artificial Intelligence (AI) in Education’, https://www.gov.uk/government/publications/generative-artificial-intelligence-in-education/generative-artificial-intelligence-ai-in-education, accessed 10 September 2025.

D’Olimpio, L. (2017), Media and Moral Education: A Philosophy of Critical Engagement, Abingdon: Routledge.

Dweck, C. (2012), Mindset: Changing the Way You Think to Fulfil Your Potential, London: Robinson.

Epley, N., A. Waytz and J. T. Cacioppo (2007), ‘On seeing human: A three-factor theory of anthropomorphism’, Psychological Review, 114 (4), 864–86, https://doi.org/10.1037/0033-295X.114.4.864, accessed 19 September 2024.

Fiorella, L. and R. E. Mayer (2016), ‘Eight ways to promote generative learning’, Educational Psychology Review, 28 (4), 717–41.

Giaconi, C. and S. A. Capellini (2020), Dyslexia: Analysis and Clinical Significance, New York: Nova Science Publishers.

Goto, E. (2022), ‘Digital literacy and philosophy for children’, Hello World, 19, 64–65, https://downloads.ctfassets.net/oshmmv7kdjgm/5zkHUMOcCFRiFwLG3oc1Gu/e98b0533f5b25fb682f54db9c38768ea/HelloWorld19.pdf, accessed 18 September 2024.

Hood, N. (2024), ‘AI is radically changing what is possible for SEND learners: Exclusive interview with co-founder of TeachMateAI, and Twinkl AI Lead’, Twinkl, https://www.twinkl.co.uk/news/ai-is-radically-changing-what-is-possible-for-send-learners-exclusive-interview-with-co-founder-of-teachmateai-and-twinkl-ai-lead, accessed 24 October 2024.

Jones, A. and G. Castellano (2018), ‘Adaptive robotic tutors that support self-regulated learning: A longer-term investigation with primary school children’, International Journal of Social Robotics, 10, 357–70.

Leu, D. (2017), ‘Schools are an important key to solving the challenge of fake news’, UCONN: NEAG School of Education, https://education.uconn.edu/2017/01/30/schools-are-an-important-key-to-solving-the-challenge-of-fake-news/, accessed 24 September 2024.

Liang, J.-C., G.-J. Hwang, M.-R.A. Chen and D. Darmawansah (2021), ‘Roles and research foci of artificial intelligence in language education: An integrated bibliographic analysis and systematic review approach’, Interactive Learning Environments, 31 (7), 1–27, https://doi.org/10.1080/10494820.2021.1958348, accessed 20 October 2024.

Luckin, R. (2016), ‘Why artificial intelligence could replace exams: Professor Rose Luckin speaks on Radio 4’, UCL News, https://www.ucl.ac.uk/news/headlines/2016/jun/artificial-intelligence-alternative-form-assessment, accessed 25 October 2024.

Luckin, R. (2023), ‘Yes, AI could profoundly disrupt education. But maybe that’s not a bad thing’, The Guardian, 14 July, https://www.theguardian.com/commentisfree/2023/jul/14/ai-artificial-intelligence-disrupt-education-creativity-critical-thinking, accessed 26 October 2024.

Luckin, R., W. Holmes, M. Griffiths and L. B. Forcier (2016), Intelligence Unleashed: An Argument for AI in Education, London: Pearson.

Major, L., P. Warwick, I. Rasmussen, S. Ludvigsen and V. Cook (2018), ‘Classroom dialogue and digital technologies: A scoping review’, Education and Information Technologies, 23 (5), 1995–2028, https://doi.org/10.1007/s10639-018-9701-y, accessed 20 October 2024.

Mitra, S. (2012), Beyond the Hole in the Wall: Discover the Power of Self-Organized Learning, United Kingdom: TED Books.

Mitra, S. (2017), ‘Minimally invasive education’, Thinking Digital Conference, https://www.youtube.com/watch?v=svWynGmBQb0, accessed 14 September 2024.

Muthmainnah, M., P. M. Ibna Seraj and I. Oteir (2022), ‘Playing with AI to investigate human-computer interaction technology and improving critical thinking skills to pursue 21st century age’, Education Research International, 2022 (1), https://doi.org/10.1155/2022/6468995, accessed 28 September 2024.

National Education Union (2024), ‘State of education: Workload and wellbeing’, https://neu.org.uk/press-releases/state-education-workload-and-wellbeing, accessed 19 September 2024.

National Literacy Trust (2018), ‘Fake news and critical literacy: The final report of the Commission on Fake News and the Teaching of Critical Literacy in Schools’, https://cdn.literacytrust.org.uk/media/documents/Fake_news_and_critical_literacy_-_final_report.pdf, accessed 28 September 2024.

Nguwi, Y. (2023), ‘Technologies for education: From gamification to ai-enabled learning’, International Journal of Multidisciplinary Perspectives on Higher Education, 8 (1).

Ofcom (2022), ‘Children and parents: Media use and attitudes report’, https://www.ofcom.org.uk/siteassets/resources/documents/research-and-data/media-literacy-research/children/childrens-media-use-and-attitudes-2022/childrens-media-use-and-attitudes-report-2022.pdf?v=327686, accessed 10 October 2024.

Ofsted (2019), ‘Teacher well-being at work in schools and further education providers’, https://assets.publishing.service.gov.uk/media/5fb41122e90e07208d0d5df1/Teacher_well-being_report_110719F.pdf, accessed 24 October 2024.

Patrick, R. and A. Javed (2024), ‘AI apps that enhance history teaching’, Agora, 59 (2), 25–28.

Perrotta, C. and N. Selwyn (2020), ‘Deep learning goes to school: Toward a relational understanding of AI in education’, Learning, Media and Technology, 45 (3), 251–69, https://doi.org/10.1080/17439884.2020.1686017, accessed 13 October 2024.

Piaget, J. (1926), The Language and Thought of the Child, New York: Harcourt, Brace.

Pilgrim, J., S. Vasinda, C. Bledsoe and E. Martinez (2019), ‘Critical thinking is critical: Octopuses, online sources, and reliability reasoning’, The Reading Teacher, 73 (1), 85–93, https://doi.org/10.1002/trtr.1800, accessed 24 October 2024.

Pillai, M. S. (2023), ‘Hands-on training on the use of AI in the teaching-learning process: Different modules for online teaching’, Onomazein: Revista de Lingüística y Traducción del Instituto de Letras de la Pontificia Universidad Católica de Chile, 62, 202–22.

Public Health England (2021), ‘Promoting children and young people’s emotional health and wellbeing: A whole school and college approach’, https://assets.publishing.service.gov.uk/media/614cc965d3bf7f718518029c/Promoting_children_and_young_people_s_mental_health_and_wellbeing.pdf, accessed 29 September 2024.

Reid, G. (2017), Dyslexia in the Early Years: A Handbook for Practice, London: Jessica Kingsley Publishers.

Roberts, J. (2024), ‘Oak national: We’ve created safe AI that saves teachers time’, TES Magazine, https://www.tes.com/magazine/analysis/general/oak-national-academy-lesson-planning-ai-tool-cuts-teacher-workload, accessed 12 October 2024.

Schroeder, K., M. Hubertz, R. Van Campenhout and B. G. Johnson (2022), ‘Teaching and learning with AI-generated courseware: Lessons from the classroom’, Online Learning, 26 (3), https://doi.org/10.24059/olj.v26i3.3370, accessed 18 October 2024.

Selwyn, N. (2019), Should Robots Replace Teachers? Cambridge: Polity Press.

Serholt, S. and W. Barendregt (2016), ‘Robots tutoring children: Longitudinal evaluation of social engagement in child-robot interaction’, Proceedings of the 9th Nordic Conference on Human-Computer Interaction, https://doi.org/10.1145/2971485.2971536, accessed 19 October 2024.

Sperling, K., L. Stenliden, J. Nissen and F. Heintz (2022), ‘Still w(AI)ting for the automation of teaching: An exploration of machine learning in Swedish primary education using Actor-Network Theory’, European Journal of Education, 57 (4), 584–600, https://doi.org/10.1111/ejed.12526, accessed 12 October 2024.

Tuomi, I. (2018), ‘The impact of Artificial Intelligence on learning, teaching, and education. Policies for the future’, Publications Office of the European Union, https://publications.jrc.ec.europa.eu/repository/bitstream/JRC113226/jrc113226_jrcb4_the_impact_of_artificial_intelligence_on_learning_final_2.pdf, accessed 18 October 2024.

Wang, X., P. Chen, D. Yang, A. Hosny and J. Lavonen (2023), ‘Fostering computational thinking through unplugged activities: A systematic literature review and meta-analysis’, International Journal of STEM Education, 10 (1), https://doi.org/10.1186/s40594-023-00434-7, accessed 12 May 2025.

Wilby, P. (2016), ‘Sugata Mitra – the professor with his head in the cloud’, The Guardian, 7 June, https://www.theguardian.com/education/2016/jun/07/sugata-mitra-professor-school-in-cloud, accessed 8 October 2024.

Worth, D. (2021), ‘Is there enough support for mental health in schools?’, TES Magazine, https://www.tes.com/news/mental-health-schools-education-teachers-send-wellbeing-charter, accessed 10 October 2024.

Worth, D. (2024), ‘The AI challenge for teacher training - and what it’s doing about it’, TES Magazine, https://www.tes.com/magazine/analysis/general/will-teacher-training-teach-how-to-use-ai, accessed 23 October 2024.

YoungMinds (2020), ‘School staff warn of the extensive impact of COVID-19 pandemic on young people’s mental health’, https://www.youngminds.org.uk/about-us/media-centre/press-releases/school-staff-warn-of-the-extensive-impact-of-covid-19-pandemic-on-young-people-s-mental-health/, accessed 8 October 2024.